Creating Sparse, Multitask Neural Networks

A new machine learning framework for sparse multitask models.

By Ian Sotnek and Jacob Renn @ AI Squared

The use of Machine Learning (ML) has become increasingly central to the acquisition and interpretation of data. As improved methodologies for training ML models are created, the performance of models trained with these methodologies is inevitably compared to the field’s moonshot: Artificial General Intelligence. However, today’s models fall far short of generalizing robustly across multiple tasks, let alone being fully general.

To address the shortcomings of modern ML architectures, we’ve turned for inspiration to the human brain. Specifically, we sought to emulate the sparse, hierarchical structure of the human cortex, which enables the flexible learning and multitask performance that we humans are lucky enough to possess. The model architecture we created, which we’ve named Multitask Artificial Neural Network (MANN, for short), combines this biologically inspired structure with recent advancements in sparse neural network architectures to yield models capable of addressing the most pressing shortcomings of state-of-the-art ML.

Two major shortcomings of modern ML solutions that MANN alleviates:

- Modern ML methods can be really, really great at one specific, narrow task. Robustly learning multiple similar or disparate tasks within the same model, however, is currently limited by catastrophic forgetting: as a model learns a new task, its internal representation of the previous task degrades, which undermines robust multitask performance. MANN isolates task representations into separate subnetworks within the larger network, preventing the acquisition of new tasks from interfering with previously learned tasks.

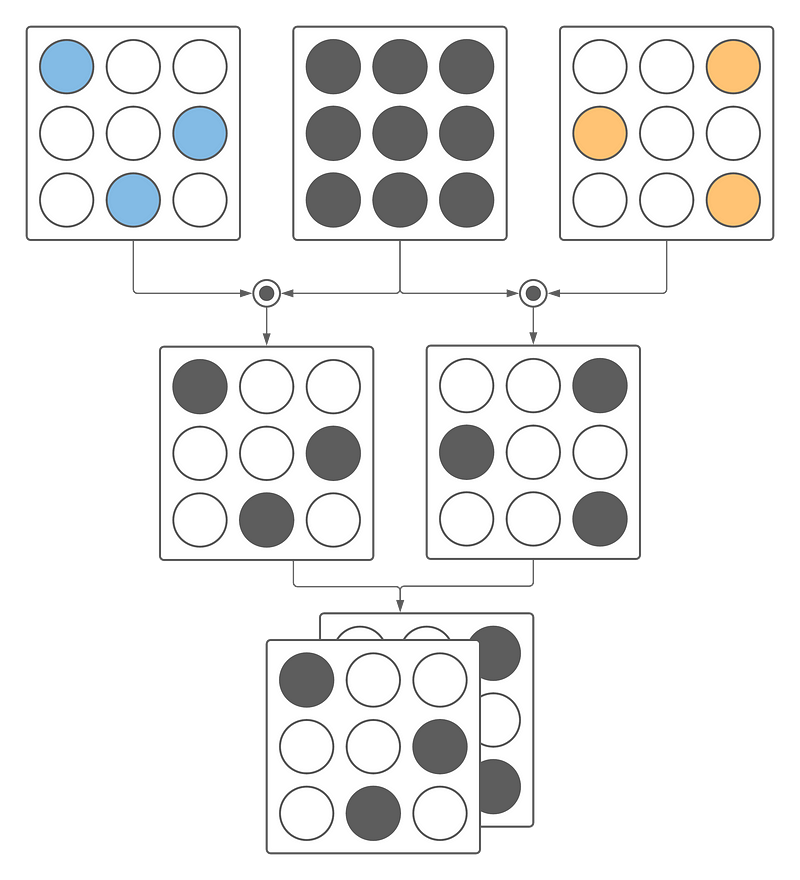

- When shooting for cutting-edge performance, some architectures can balloon to hundreds of billions of parameters. These models can be dense and inefficient, and as a result can run only on high-end hardware. MANN utilizes our custom pruning technique, Reduction in Sub-Network Neuroplasticity (RSN2), which eliminates all the parameters not directly needed for the performance of a given task. We then combine the sparse representations of each task included in the model into a sparse tensor, as shown in Figure 1. The overall benefit of MANN with respect to computational resources is that you can eliminate up to 90% of the model size without losing any predictive performance, enabling the model to perform as well in resource-constrained environments as in a data center.
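To make the two ideas above more concrete, here is a minimal, illustrative sketch in plain Python. It is not the RSN2 algorithm or the MANN API: it assumes a single flat list of weights shared by two tasks and uses simple magnitude-based pruning as a stand-in for the real technique. All function names (`top_k_mask`, `build_task_masks`, `apply_mask`) are hypothetical. The point is only to show how non-overlapping per-task masks keep one task's updates from touching another task's weights, and how keeping 10 of 100 weights corresponds to 90% sparsity.

```python
import random


def top_k_mask(weights, available, keep):
    """Return a 0/1 mask that keeps the `keep` largest-magnitude weights
    among the positions still marked as available."""
    candidates = [i for i, free in enumerate(available) if free]
    candidates.sort(key=lambda i: abs(weights[i]), reverse=True)
    chosen = set(candidates[:keep])
    return [1 if i in chosen else 0 for i in range(len(weights))]


def build_task_masks(weights, num_tasks, keep_per_task):
    """Assign each task its own disjoint set of weights (assumption:
    magnitude pruning stands in for the actual RSN2 procedure)."""
    available = [True] * len(weights)
    masks = []
    for _ in range(num_tasks):
        mask = top_k_mask(weights, available, keep_per_task)
        masks.append(mask)
        # Weights claimed by this task are no longer available to later tasks.
        available = [free and not m for free, m in zip(available, mask)]
    return masks


def apply_mask(weights, mask):
    """Effective weights seen by one task: everything outside its mask is zero."""
    return [w * m for w, m in zip(weights, mask)]


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(100)]
masks = build_task_masks(weights, num_tasks=2, keep_per_task=5)

# No weight belongs to both tasks.
overlap = sum(a * b for a, b in zip(masks[0], masks[1]))

# Fraction of weights that no task uses.
active = sum(max(a, b) for a, b in zip(*masks))
sparsity = 1 - active / len(weights)

# Simulate a training update for task 1 that only touches its own weights.
task0_before = apply_mask(weights, masks[0])
weights = [w + 0.5 * m for w, m in zip(weights, masks[1])]
task0_after = apply_mask(weights, masks[0])

print("overlap between task masks:", overlap)
print(f"sparsity: {sparsity:.0%}")
print("task 0 unchanged by task 1 update:", task0_before == task0_after)
```

Because the masks are disjoint, the update applied for the second task leaves the first task's effective weights untouched, which is the intuition behind avoiding catastrophic forgetting in this setup.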

Installing MANN

The MANN package is pip-installable via PyPI here. If you have an M1 Mac, please use this package to ensure compatibility with your hardware.

Running MANN

Say you want to create an application that scans webpages looking for items of clothing and rates them according to the reviews provided by people who’ve bought that item. By combining a fashion recognition task and a sentiment analysis task within the same model, you would halve the complexity of integrating both tasks into your application!

We’ve created a sample notebook that walks through building a sparse model that learns both a computer vision task (image classification) and a natural language processing task (sentiment analysis), to give you an idea of how our libraries can be used. The notebook can also be accessed here.

Update 07/2022: You can now read more about our methodology in our arXiv preprint.

Don’t forget to give us your 👏!